Publications

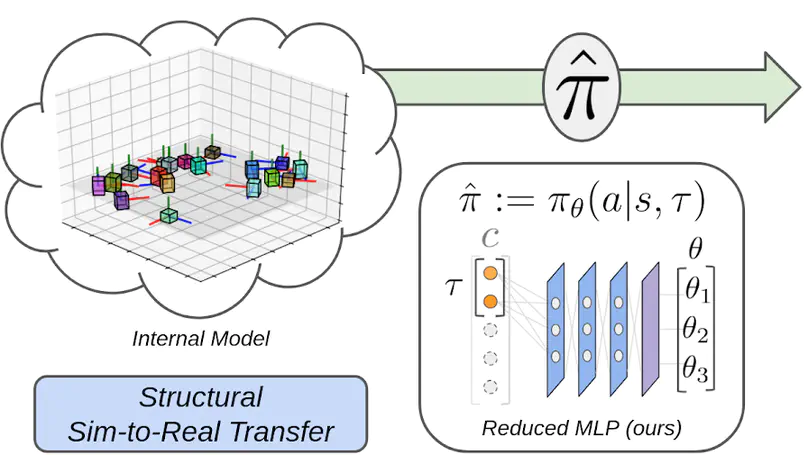

We present CREST, an approach for causal reasoning in simulation to learn the relevant state space for a robot manipulation policy. Our approach conducts interventions using internal models, which are simulations with approximate dynamics and simplified assumptions. These interventions elicit the structure between the state and action spaces, enabling construction of neural network policies with only relevant states as input. These policies are pretrained using the internal model with domain randomization over the relevant states. The policy network weights are then transferred to the target domain (e.g., the real world) for fine-tuning. We perform extensive policy transfer experiments in simulation for two representative manipulation tasks: block stacking and crate opening. Our policies are shown to be more robust to domain shifts and more sample-efficient to learn, and to scale to more complex settings with larger state spaces. We also show improved zero-shot sim-to-real transfer of our policies for the block stacking task.
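The intervention idea in this abstract can be illustrated with a toy sketch. This is not the CREST implementation: the internal model, the state variable names, and the `find_relevant_states` helper are all hypothetical, chosen only to show how perturbing one state variable at a time in a cheap model can reveal which variables affect the action.

```python
import random


def internal_model_best_action(state):
    """Hypothetical internal model (an assumption for illustration).

    The best 1-D target depends only on the block position and the goal
    offset; the other state variables are distractors.
    """
    return state["block_x"] + state["goal_offset"]


def find_relevant_states(model, base_state, n_trials=20, delta=0.5, tol=1e-9, seed=0):
    """Return the state variables whose intervention changes the model output."""
    rng = random.Random(seed)
    relevant = []
    for name in base_state:
        for _ in range(n_trials):
            # Sample a random context around the base state.
            context = {k: v + rng.uniform(-1.0, 1.0) for k, v in base_state.items()}
            # Intervene on one variable while holding the others fixed.
            intervened = dict(context)
            intervened[name] = context[name] + delta
            if abs(model(intervened) - model(context)) > tol:
                relevant.append(name)
                break
    return relevant


base = {"block_x": 0.2, "goal_offset": 0.1, "distractor_x": -0.4, "table_color": 0.7}
relevant = find_relevant_states(internal_model_best_action, base)
print(relevant)  # ['block_x', 'goal_offset']
```

In this toy setting, a policy network would then take only the variables in `relevant` as input, and domain randomization during pretraining would be applied over those variables alone.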

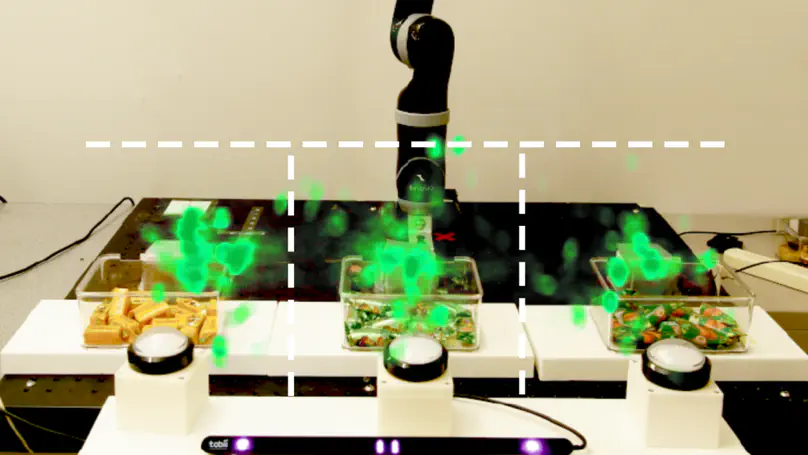

Collaborative robots that provide anticipatory assistance are able to help people complete tasks more quickly. As anticipatory assistance is provided before help is explicitly requested, there is a chance that this action itself will influence the person’s future decisions in the task. In this work, we investigate whether a robot’s anticipatory assistance can drive people to make choices different from those they would otherwise make. Such a study requires measuring intent, which itself could modify intent, resulting in an observer paradox. To avoid this effect, we carefully designed our experiment, considering mitigations such as the careful choice of which human behavioral signals we use to measure intent and the design of unobtrusive ways to obtain these signals. We conducted a user study (N = 99) in which participants completed a collaborative object retrieval task: users selected an object and a robot arm retrieved it for them. The robot predicted the user’s object selection from eye gaze in advance of their explicit selection, and then provided either collaborative anticipation (moving toward the predicted object), adversarial anticipation (moving away from the predicted object), or no anticipation (no movement, control condition). We found trends and participant comments suggesting that people’s decision making changes in the presence of a robot’s anticipatory motion and that this change differs depending on the robot’s anticipation strategy.

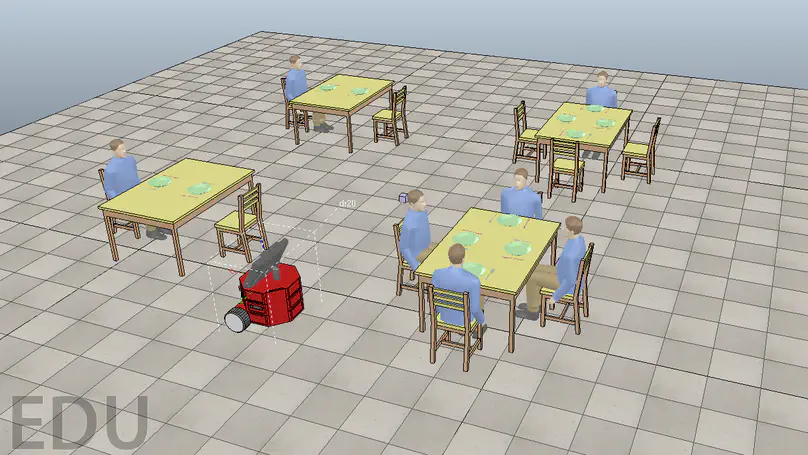

The goal of this work is to train a model to quantify mental states such as neediness and interruptibility from human action patterns in restaurant scenarios. Our long-term vision is to develop robot waiters that can intelligently respond to customers. Our key insight is that human behaviors can both actively and passively communicate customers’ underlying mental states. To interpret behaviors indicating neediness and interruptibility, we automatically label key moments of human service patterns in restaurants based on waiter location and objects, as well as human behavior patterns in terms of pose, facial expression, and facial action units. Our effort to build a model is complicated by a lack of ground truth information, unreliability in waiter actions, and the effect of distractions and non-service social interactions on customer signals, and we propose solutions to each of these issues. We plan to compare the performance of several machine learning methods in predicting moments when waiters attend to customer needs based on this model.